The Best Way To Change A Vocalist With AI in 2026

I tested two methods for changing a song's vocalist with AI: Suno's persona feature vs RVC pre-processing. Here's what actually works and what wastes your time.

One of the most common questions I get from clients at Music Made Pro is how to change the vocalist on an AI-generated song. They want their own voice or a specific singer instead of whatever Suno or Udio spit out.

I ran an experiment to test two different approaches. One is simple and fast. The other requires more technical work but promises closer voice matching. Spoiler: the easy method wins for most use cases.

The Two Methods I Tested

Method 1: Suno Persona Only - Upload your song, create a persona from 30 seconds of target vocal, and let Suno do the heavy lifting. Fast, straightforward, less control.

Method 2: RVC Pre-Processing - Isolate the lead vocal, convert it using a trained RVC model to sound like the target singer, then feed that converted audio to Suno. More steps, theoretically better voice matching.

The hypothesis was that giving Suno an input that already sounds closer to the target voice would produce better results. Turns out that hypothesis was wrong.

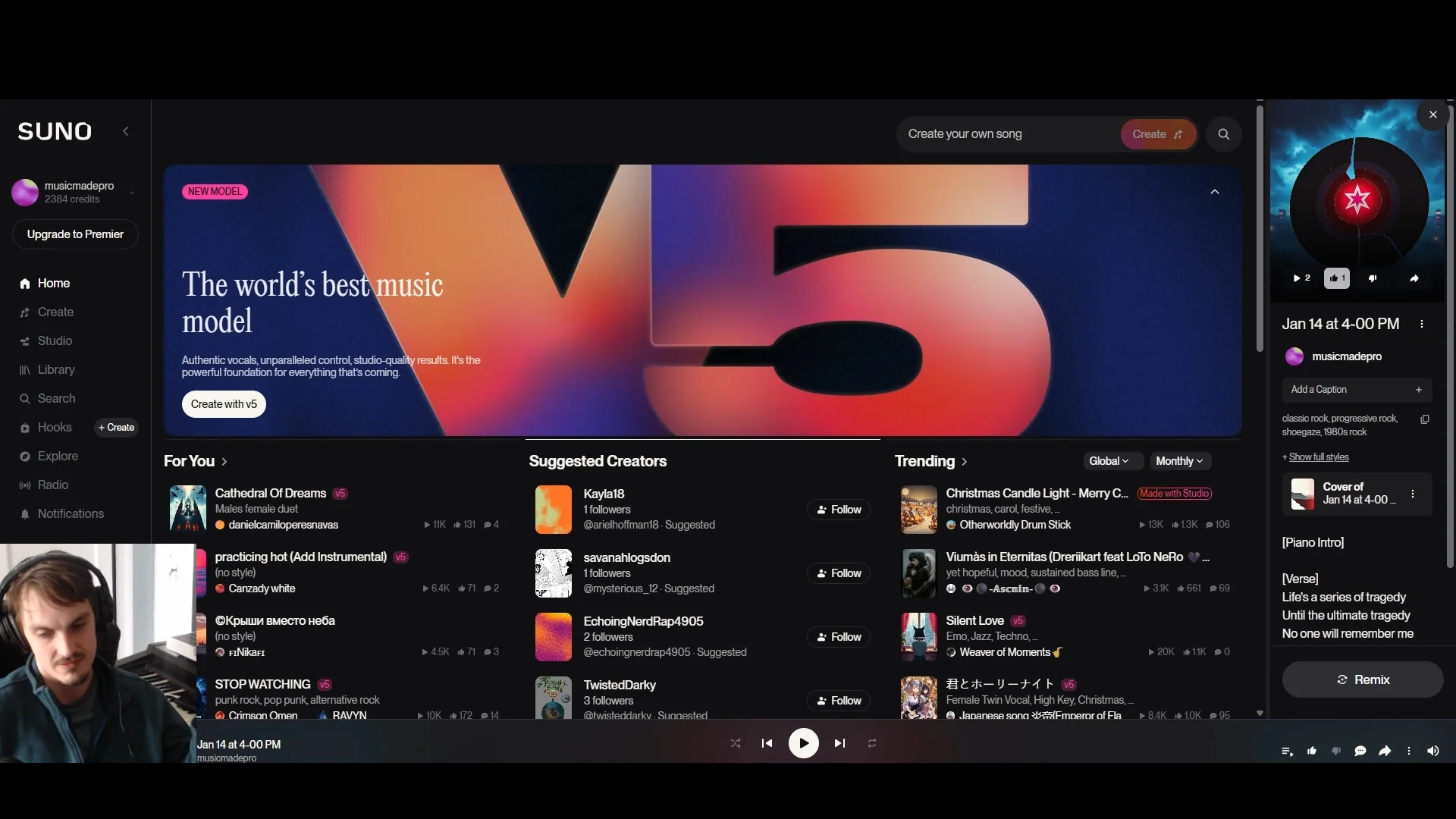

Method 1: The Simple Suno Persona Approach

This is the workflow I use most often. Upload your master audio to Suno, verify the lyrics are correct (Suno messes these up sometimes), then create a persona from reference audio.

For the persona, you want about 30 seconds that show the singer's dynamic range. Pick a section that goes from quiet to loud. This gives Suno more data points to work with.
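
If you would rather not pick that section by ear, here is a minimal sketch of one way to find it. It assumes you already have one loudness reading per second for the reference vocal (for example, read off a DAW meter). The function name `best_reference_window` and the input format are hypothetical and are not part of Suno.

```python
def best_reference_window(levels_db, window_s=30):
    """Return (start_second, spread_db) for the window with the widest level spread.

    levels_db: one loudness reading in dB per second of audio (assumed input).
    window_s: length of the persona reference in seconds.
    """
    if len(levels_db) < window_s:
        raise ValueError("reference audio is shorter than the requested window")

    best_start, best_spread = 0, -1.0
    for start in range(len(levels_db) - window_s + 1):
        window = levels_db[start:start + window_s]
        spread = max(window) - min(window)  # quietest to loudest second
        if spread > best_spread:
            best_start, best_spread = start, spread
    return best_start, best_spread
```

A wide spread only tells you the section moves from quiet to loud. Still listen to the window before using it, since a loud breath or a bleed from the backing track can inflate the number.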

I set the style influence LOW because we want Suno referencing the audio heavily. Same with the "weirdness" setting. The goal is reproduction, not creativity.

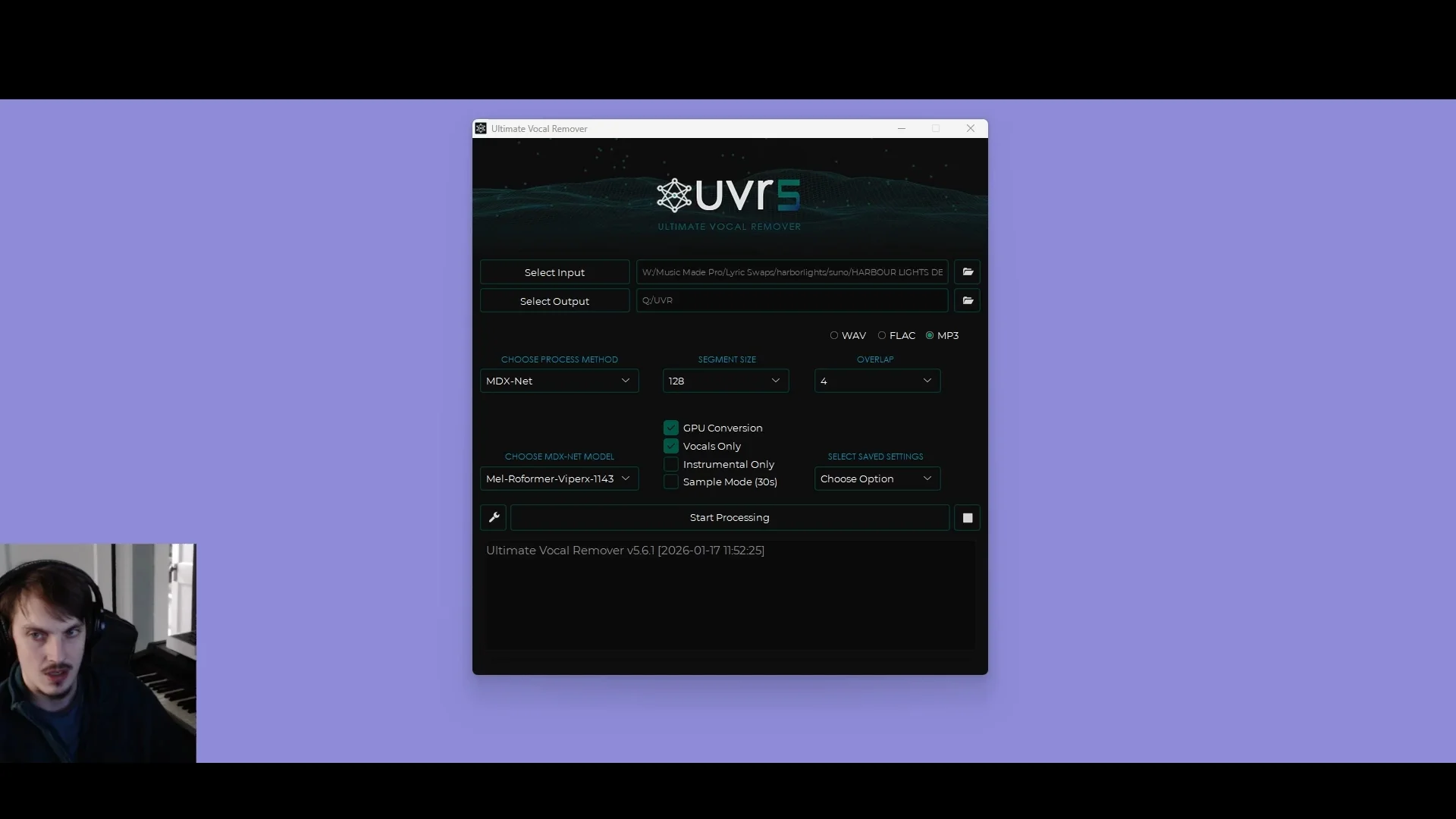

Generate multiple variations. I typically run four at a time. Then isolate the vocals using Ultimate Vocal Remover with the Roformer ViperX model and comp the best takes back into the original instrumental.

Method 2: The RVC Pre-Processing Approach

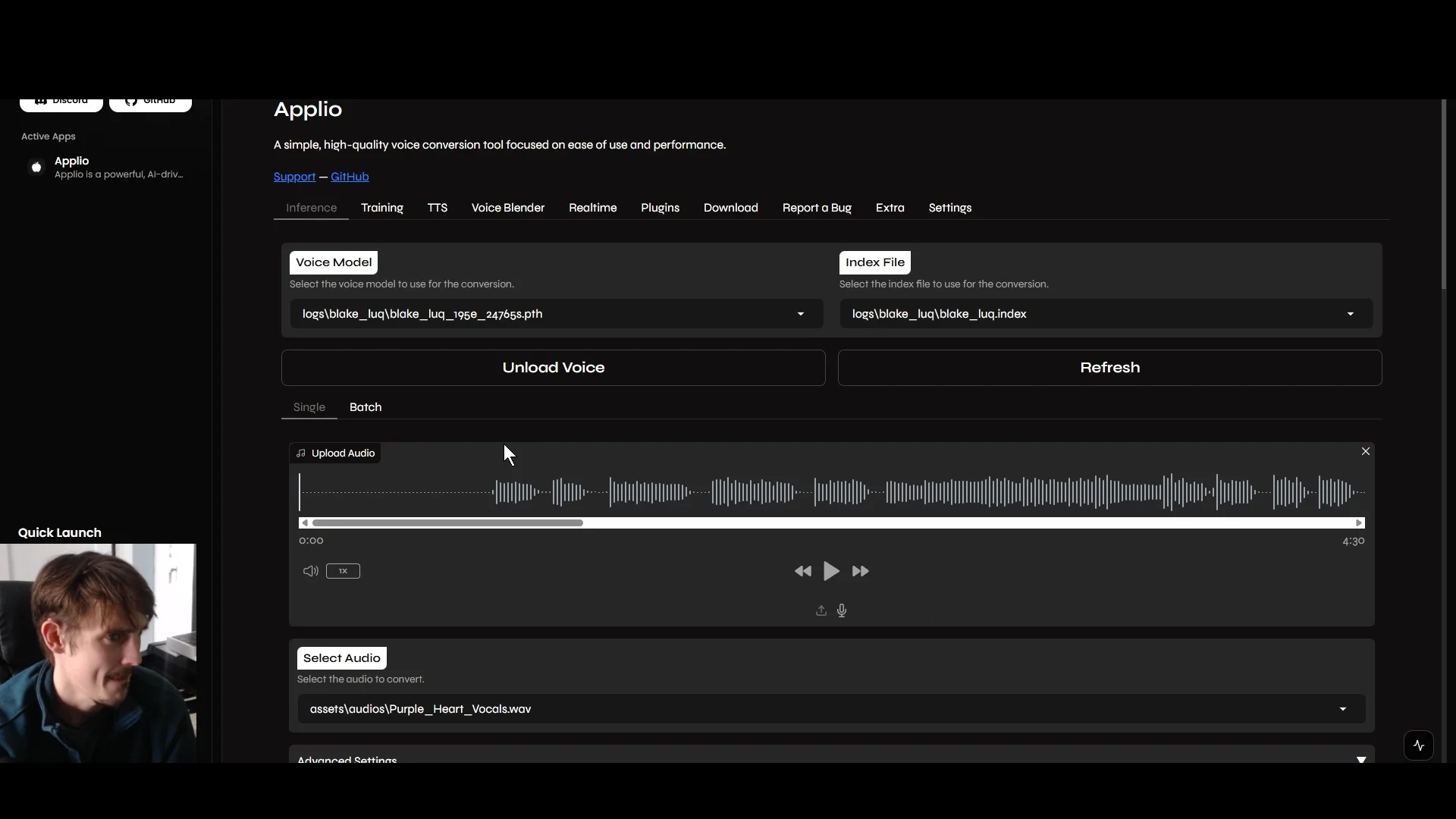

RVC stands for Retrieval-based Voice Conversion. The idea is to train a model on the target singer's voice, then convert the original vocal to sound like them before feeding it to Suno.

First, isolate the lead vocal. I recommend LALAL.AI for this, or Ultimate Vocal Remover if you prefer a local tool.

For RVC training, I tested two platforms. Weights.gg is beginner-friendly and cloud-based. For local training, I used Dione to run Applio, which makes the setup much easier than installing Applio from scratch. I ran about 195-200 epochs of training.

After conversion, I did some EQ matching and comped out the worst artifacts. Then uploaded that processed audio to Suno with the same persona settings as Method 1.
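
The post does not spell out how the EQ matching was done, so treat the following as an assumption rather than the exact method used. It is a minimal sketch of the basic idea: compare the level of each frequency band in the target singer's vocal against the converted vocal, and derive a capped gain per band. The function name, the band layout, and the 6 dB cap are all hypothetical.

```python
def eq_match_gains(target_db, converted_db, max_change_db=6.0):
    """Return the gain in dB to apply per band so the converted vocal approaches the target.

    target_db / converted_db: average level per frequency band, same band order (assumed input).
    max_change_db: cap on boost or cut, so the match does not exaggerate conversion artifacts.
    """
    if len(target_db) != len(converted_db):
        raise ValueError("both vocals must be measured over the same bands")

    gains = []
    for target, converted in zip(target_db, converted_db):
        difference = target - converted
        # Clamp the correction to the allowed range in both directions.
        gains.append(max(-max_change_db, min(max_change_db, difference)))
    return gains
```

Capping the correction is a design choice: large boosts tend to bring up exactly the artifacts you are trying to hide.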

The Results: Method 1 Wins

For most use cases, the Suno-only approach produced better results out of the box. Here is the breakdown:

| Criteria | Suno Persona Only | RVC Pre-Processing |
|---|---|---|
| Production Quality | Better | Degraded (artifacts) |
| Voice Match Accuracy | ~90% | Closer match |
| Workflow Effort | Low | High |
| Polishability | Radio-ready possible | Difficult |
| Recommended | Yes (most cases) | Only for extreme voice changes |

The RVC method did produce a closer voice match. The tradeoff is that it introduces minor sample rate distortion that trained sound engineers will notice. Some of this distortion may not be fixable in post, so you need to decide if the voice match is worth the quality tradeoff.

Why RVC Requires More Work

In this test, when I fed RVC-processed audio to Suno, the minor sampling artifacts from the voice conversion carried through to the output. Suno did not clean them up in my case. Some of these artifacts cannot be fully removed in post.

There was also a training data mismatch problem. The RVC model struggled because the vocal delivery style in the song differed from the training samples. RVC works best when the performance style matches what the model learned.

If you want to understand more about why AI vocals sometimes miss the mark, I wrote about why AI vocals fail to match the original singer in depth.

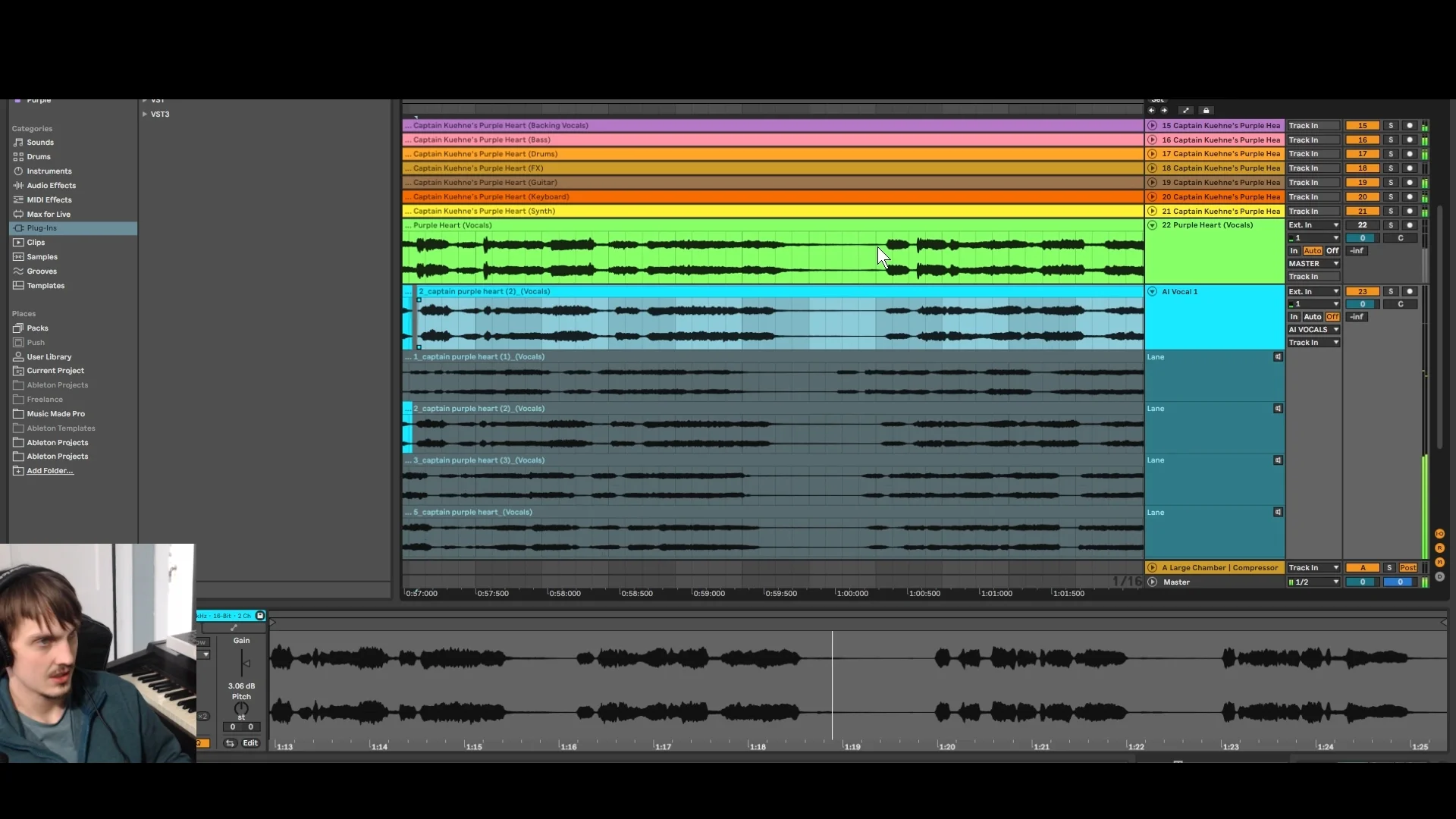

Comping Is Essential Either Way

Regardless of which method you use, comping is non-negotiable. Suno does not nail every section, just like a real singer does not nail every take.

Generate multiple variations. Extract the vocals from each. Line them up in your DAW (Suno outputs often misalign with the original timing). Pick the best phrases from each take.
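
For the alignment step, a rough offset estimate can save some nudging by hand. The sketch below is one common approach (cross-correlation), not necessarily what your DAW does internally. It assumes both signals are plain lists of numbers at the same sample rate, and the function name `estimate_offset` is hypothetical. The brute-force loop is slow, so run it on a heavily downsampled signal or a loudness envelope rather than full-rate audio.

```python
def estimate_offset(reference, take, max_lag):
    """Return the lag in samples at which `take` best lines up with `reference`.

    A positive result means the take is late and should be moved earlier by that many samples.
    A negative result means the take is early.
    """
    def score(lag):
        # Sum of products between the reference and the take shifted by `lag`.
        total = 0.0
        for i, ref_sample in enumerate(reference):
            j = i + lag
            if 0 <= j < len(take):
                total += ref_sample * take[j]
        return total

    # Try every lag in the search range and keep the one with the strongest match.
    return max(range(-max_lag, max_lag + 1), key=score)
```

Use the result as a starting point and fine-tune by ear. Generated takes can also drift within a song, so a single offset may not hold for every section.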

This is the same process I have used on 600+ lyric swap projects. Blending five okay takes consistently beats relying on one "perfect" take that does not quite match.
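
If you like to keep notes while auditioning takes, the comp itself can be planned as a simple lookup. This is a minimal sketch, assuming you rate each phrase of each take by ear on any numeric scale. The function name `comp_plan` and the rating format are hypothetical.

```python
def comp_plan(ratings):
    """Return, for each phrase, the index of the take with the highest rating.

    ratings[take][phrase] is your own score for that phrase in that take (assumed input).
    Ties go to the earliest take.
    """
    if not ratings:
        raise ValueError("need at least one take")
    phrase_count = len(ratings[0])
    if any(len(take) != phrase_count for take in ratings):
        raise ValueError("every take must be rated on the same phrases")

    plan = []
    for phrase in range(phrase_count):
        best_take = max(range(len(ratings)), key=lambda take: ratings[take][phrase])
        plan.append(best_take)
    return plan
```

For example, with three takes rated `[[3, 5, 2], [4, 1, 2], [2, 2, 5]]`, the plan uses take 1 for the first phrase, take 0 for the second, and take 2 for the third.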

When RVC Might Actually Make Sense

The RVC approach is not completely useless. Consider it when:

- You need a significant vocal change (different gender, very different voice type)

- Suno's persona matching fails completely

- Exact voice match matters more than production quality

- You have extensive, high-quality training data

- You are willing to accept quality tradeoffs and do extensive mixing work

If you do use RVC, train with more data and more epochs than I did in this test. Ensure the training data matches the performance style you need. And consider using the RVC output as a blend layer rather than a full replacement.
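
As an illustration of the blend-layer idea, here is a minimal sketch that tucks one vocal under another at a reduced level. It assumes the two vocals are already time-aligned lists of samples at the same sample rate. Treating the Suno vocal as the main track, the function name, and the -12 dB default are all assumptions, not settings from this test.

```python
def blend_layer(main, layer, layer_gain_db=-12.0):
    """Mix `layer` underneath `main` at the given gain.

    main: the primary vocal samples (assumed here to be the Suno vocal).
    layer: the supporting vocal samples (assumed here to be the RVC output).
    layer_gain_db: level of the layer relative to its original, in dB.
    """
    gain = 10 ** (layer_gain_db / 20)  # convert dB to a linear multiplier
    length = min(len(main), len(layer))  # stop at the shorter signal
    return [main[i] + gain * layer[i] for i in range(length)]
```

In practice you would do this with two faders in your DAW. The point of the sketch is only that the RVC output contributes character at low level instead of carrying the whole performance.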

One thing I found: RVC will glitch out on certain phrases. When working on a full song, I ended up using some Suno-generated vocal samples to patch over the parts where RVC sounded bad. So even with the RVC approach, you will likely need Suno or Udio inpainting to fix problem sections.

My Recommended Workflow for 2026

Based on this experiment and hundreds of client projects, here is the workflow I recommend:

Step 1: Upload your song and verify the lyrics. Suno makes transcription errors. Fix them before processing or the generated vocal will sing the wrong words.

Step 2: Create a persona from 30 seconds of your target singer. Pick a section showing dynamic range.

Step 3: Set style influence low. Set weirdness low. Manually set the correct gender.

Step 4: Generate four variations.

Step 5: Extract vocals using Ultimate Vocal Remover or LALAL.AI.

Step 6: Comp the best takes back into the original instrumental. Align timing as needed.

Step 7: If specific sections still sound off, regenerate just those sections rather than the whole song.

Tools Mentioned

- Suno AI - Vocal generation with persona feature (primary tool)

- LALAL.AI - Vocal isolation, recommended for clean separation

- Ultimate Vocal Remover - Local vocal isolation, Roformer ViperX model

- Weights.gg - Cloud RVC training, beginner friendly

- Dione - Local AI music tools platform, easiest way to run Applio

- Applio - Local RVC training engine (run it through Dione)

Bottom Line

For most vocalist replacement projects, skip the RVC pre-processing step. It adds work and the results need more mixing to sound polished.

The simple approach wins: Suno persona with good reference audio, multiple generations, and careful comping. This produces deliverable results with a fraction of the effort.

Save RVC for cases where voice matching is critical and you can live with potential artifacts that may not be fixable. For everything else, trust the straightforward workflow and iterate until it sounds right.

If you also need to change the lyrics alongside the vocalist, that is a separate process. Check out ChangeLyric for lyric swapping, but know that vocalist replacement and lyric replacement are two different workflows.

Copyright Reminder

Commercial rights from AI platforms only apply to ORIGINAL songs they generate. Modifying copyrighted songs gives you ZERO commercial rights to the result. The original copyright holder maintains all rights. Personal use exists in a legal gray area. Users are responsible for understanding applicable laws.

Frequently Asked Questions

What is the best way to change a vocalist with AI?

Use Suno's persona feature with 30 seconds of target vocal reference audio. Set style influence low, generate multiple variations, then comp the best takes back into your instrumental. Skip RVC pre-processing for most projects - it adds artifacts that Suno carried through to the output in my test instead of cleaning up.

Is RVC pre-processing worth the extra work?

It depends on your priorities. RVC produces a closer voice match, but introduces minor sampling artifacts that Suno did not remove in my test. The result requires more mixing work to polish. Worth it if exact voice matching matters more than quick turnaround.

Which tools do I need?

Suno AI for vocal generation with personas, and either LALAL.AI or Ultimate Vocal Remover for vocal isolation. If you want to try RVC, Weights.gg is beginner-friendly for cloud training, or use Dione to run Applio locally.

How many variations should I generate?

At least four variations. Suno doesn't nail every section, so you need options for comping. Extract vocals from each generation and pick the best phrases to assemble your final vocal track.

Does RVC pre-processing hurt audio quality?

In my testing, yes. The minor sampling artifacts from RVC carried through to the final output. Your results may vary depending on training quality and settings. Some artifacts cannot be fixed in post.

Need Help With Your Project?

If you would rather have someone handle the vocalist replacement for you, I offer done-for-you services through Music Made Pro. Same workflow, professional results, no learning curve.

Visit Music Made Pro